LiDAR, which stands for Light Detection and Ranging, is a sensor technology that emits pulsed laser light to measure ranges (variable distances) to the objects around it. Today, it is a crucial technology for a host of cutting-edge applications. But with a plethora of products on offer, selecting the right one for your application can be cumbersome. How does one differentiate between all the different types of LiDARs available? What are the key LiDAR specifications that can be used to evaluate their performance? In this blog post, we will demystify the defining specifications of LiDARs and give some examples of the technology’s potential applications and uses.

LiDAR specifications explained

- LiDAR Detection Range

- Range Precision and Accuracy

- Field-of-View (FoV)

- Scan Pattern

- Cross Talk Immunity

- LiDAR Detection Rate

- Multiple Returns

LiDAR Detection Range

Among the plethora of LiDAR specifications, the detection range is probably the most prominent one by which the sensor is measured. It refers to the farthest distance at which the sensor can detect an object. This largely depends on the power of the laser source: the higher the power, the farther away the LiDAR can detect an object. The maximum permitted laser power is limited by eye safety regulations. Other factors that determine the LiDAR range include sensor specifications such as the type of laser and the aperture size, properties of the reflecting object such as size, distance, reflectivity, and diffuse or specular reflection, and external influences like the weather and temperature.

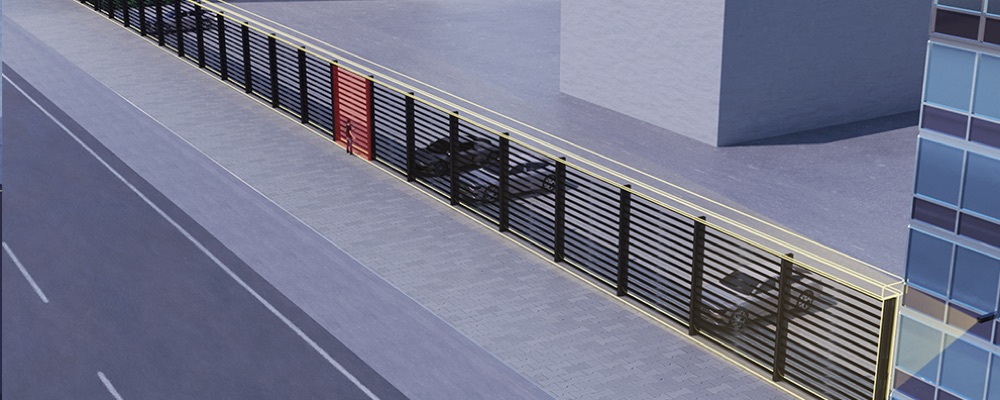

An example where a long detection range can be important is an intrusion detection system. A LiDAR can be installed along a wall or fence to detect any object that enters a predetermined area. Algorithms also allow for object classification, making it possible to issue an alert only if a particular criterion is met. But the LiDAR’s detection range can still be a limiting factor. If the wall is particularly long and the LiDAR’s detection range is limited, multiple LiDAR sensors are needed to cover the whole perimeter for foolproof security. A long-range LiDAR is therefore desirable to make the system easier to manage and economically viable.
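The trade-off can be put into rough numbers. The following is a minimal sketch, assuming each sensor covers a straight stretch of fence equal to its detection range; the function name `sensors_needed` and the perimeter and range values are hypothetical and chosen only for illustration.

```python
import math


def sensors_needed(perimeter_m: float, detection_range_m: float) -> int:
    """Estimate how many sensors are needed to cover a perimeter.

    Assumption: each sensor covers a straight stretch equal to its
    detection range, with no overlap and no blind spots.
    """
    if perimeter_m < 0 or detection_range_m <= 0:
        raise ValueError("perimeter must be >= 0 and range must be > 0")
    return math.ceil(perimeter_m / detection_range_m)


if __name__ == "__main__":
    # Hypothetical 1 km fence and three hypothetical detection ranges.
    for detection_range in (50, 100, 250):
        count = sensors_needed(1000, detection_range)
        print(f"{detection_range} m range: {count} sensors")
```

A real installation would also need to account for corners, mounting positions, and overlap between neighboring sensors, so the result is best read as a lower bound.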

The Detection Range of a LiDAR describes the farthest distance where it can detect an object.

The factors that determine the range can roughly be separated into three different categories:

- properties of the LiDAR (e.g., type of laser, power of the laser source, aperture size)

- external influences (e.g., rain, fog, snow, sunlight)

- properties of the object (e.g., size, distance, reflectivity, diffuse or specular reflection)
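The three categories above can be tied together with a simplified textbook form of the LiDAR range equation. The sketch below assumes an extended, diffusely reflecting (Lambertian) target at normal incidence and a single exponential term for atmospheric loss. It is not a description of how any particular sensor is specified, and all names and numbers (`received_power_w`, `max_range_m`, the transmit power, the minimum detectable power) are hypothetical.

```python
import math


def received_power_w(p_tx_w, reflectivity, aperture_diameter_m, range_m,
                     efficiency=1.0, attenuation_per_m=0.0):
    """Simplified LiDAR equation for an extended Lambertian target.

    P_rx = P_tx * rho * eta * exp(-2 * alpha * R) * A_rx / (pi * R^2)
    """
    aperture_area_m2 = math.pi * (aperture_diameter_m / 2) ** 2
    two_way_loss = math.exp(-2 * attenuation_per_m * range_m)
    return (p_tx_w * reflectivity * efficiency * two_way_loss
            * aperture_area_m2 / (math.pi * range_m ** 2))


def max_range_m(p_tx_w, reflectivity, aperture_diameter_m, p_min_w,
                efficiency=1.0, attenuation_per_m=0.0):
    """Largest range at which the received power still reaches p_min_w.

    Found by bisection, since received power falls monotonically with range.
    """
    def detectable(range_m):
        return received_power_w(p_tx_w, reflectivity, aperture_diameter_m,
                                range_m, efficiency, attenuation_per_m) >= p_min_w

    low, high = 1e-3, 1e5  # search bounds in meters (assumed)
    if not detectable(low):
        return 0.0
    if detectable(high):
        return high
    for _ in range(100):
        mid = (low + high) / 2
        if detectable(mid):
            low = mid
        else:
            high = mid
    return low


if __name__ == "__main__":
    # Hypothetical values: 10 W peak power, 10 % reflectivity, 10 nW threshold.
    for diameter_m in (0.005, 0.010, 0.020):
        clear = max_range_m(10.0, 0.1, diameter_m, 1e-8)
        foggy = max_range_m(10.0, 0.1, diameter_m, 1e-8, attenuation_per_m=0.01)
        print(f"aperture {diameter_m * 1000:.0f} mm: "
              f"clear {clear:.1f} m, attenuated {foggy:.1f} m")
```

In this simplified model, the maximum range in clear air grows linearly with the aperture diameter and with the square root of the laser power and the target reflectivity, while atmospheric attenuation shortens it.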

Blickfeld’s Cube product family has an exceptionally large range for a MEMS-based LiDAR. This is due to the proprietary mirror design: the mirror is more than 10 millimeters in diameter and therefore provides a large aperture. A high proportion of the reflected photons is thus directed onto the photodetector, allowing the sensors to reliably detect even weakly reflecting objects at a large distance.

LiDAR Range Precision and Accuracy

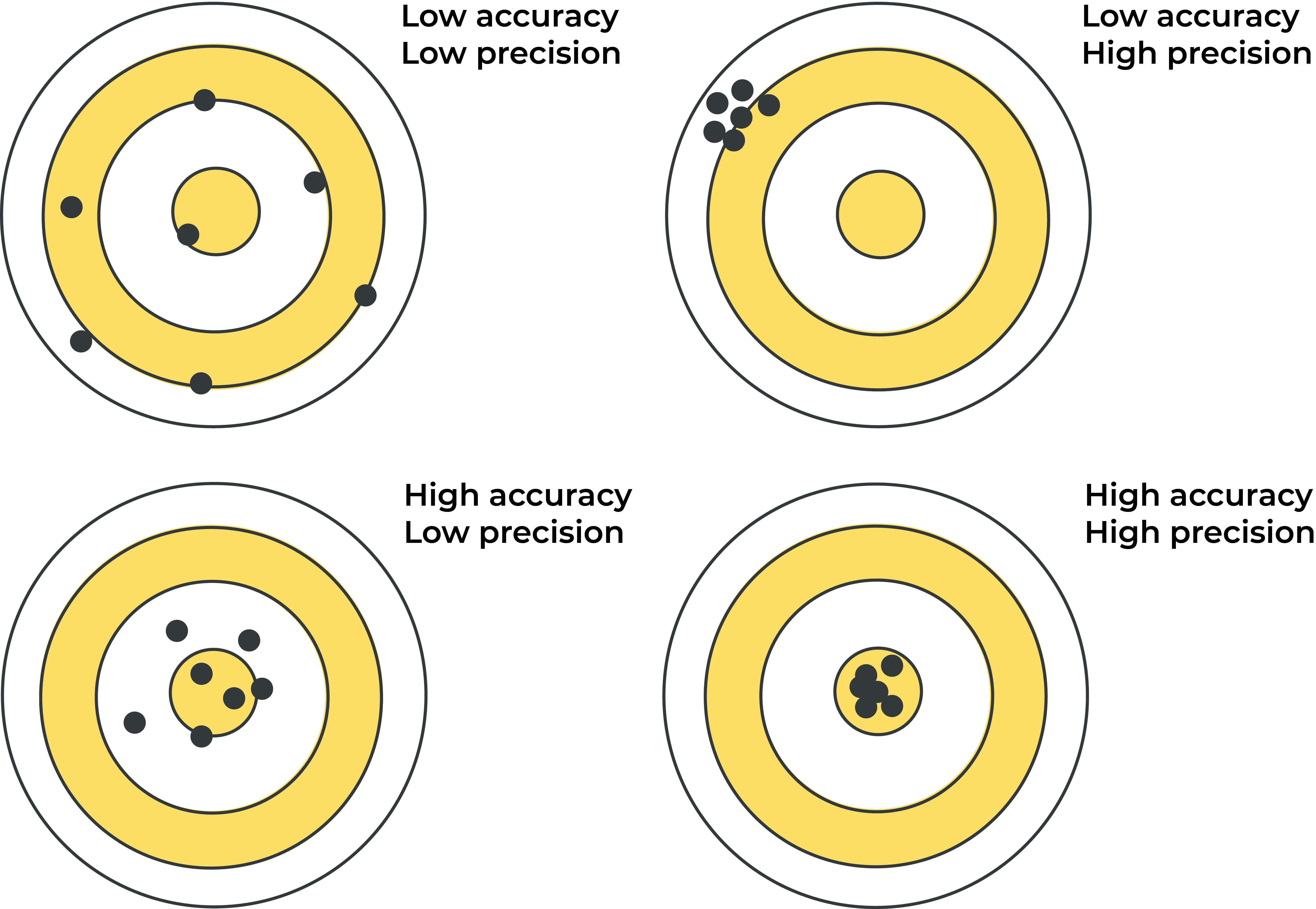

Range precision and accuracy are key LiDAR specifications, and distinguishing between them is important as they often get confused with each other.

LiDAR Range precision

Precision is a measure of the repeatability of LiDAR measurements. High precision means that repeated measurements of the same target will be very close to the mean value; low precision means a large scatter of the values around the mean.

How to Calculate LiDAR Range Precision:

To determine LiDAR range precision, multiple range measurements are performed on the same target under identical environmental conditions, i.e., several frames of the same scene are recorded. The mean of the measured range values to a given object is then calculated and subtracted from each individual measurement. Finally, the root mean square (RMS) of these deviations is calculated, which corresponds to the standard deviation of the range measurements.
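The procedure can be sketched in a few lines of Python. This is a minimal illustration, assuming the deviations are taken between each individual measurement and the mean; the function name `range_precision` and the sample values are hypothetical.

```python
import math


def range_precision(ranges_m):
    """RMS deviation of repeated range measurements from their mean.

    This equals the population standard deviation of the measurements.
    """
    count = len(ranges_m)
    if count == 0:
        raise ValueError("at least one measurement is required")
    mean = sum(ranges_m) / count
    deviations = [r - mean for r in ranges_m]
    return math.sqrt(sum(d * d for d in deviations) / count)


if __name__ == "__main__":
    # Hypothetical ranges to the same target over five consecutive frames.
    frames_m = [25.02, 24.98, 25.01, 24.99, 25.00]
    print(f"range precision: {range_precision(frames_m) * 100:.2f} cm")
```

The smaller the returned value, the tighter the measurements cluster around their mean and the more precise the sensor is for that target and distance.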

Range precision is crucial for applications such as speed-camera readings, where the vehicle’s speed has to be calculated using the distance between the LiDAR and the moving target within a short time interval.

Range precision depends on the distance between the sensor and the target and on the characteristics of the target, such as its reflectivity and the angle of incidence.

Precision is a measure of the repeatability of LiDAR measurements. High precision means repeated measurements are close to the mean value.

LiDAR Range accuracy

Accuracy defines how close a given measurement is to the real value, i.e., the proximity of the measured target distance to its actual distance. For a range-accurate LiDAR, the measured distance values should be very close to the actual distance and within the specified range accuracy.
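The difference from precision becomes clear when the two are computed side by side. The sketch below assumes that accuracy is checked as the mean range error against a known reference distance; the names `range_bias` and `within_accuracy_spec`, the sample measurements, and the specification values are hypothetical.

```python
import statistics


def range_bias(ranges_m, true_distance_m):
    """Signed error: mean measured range minus the reference distance."""
    return statistics.fmean(ranges_m) - true_distance_m


def within_accuracy_spec(ranges_m, true_distance_m, spec_m):
    """True if the mean range error stays within +/- spec_m."""
    return abs(range_bias(ranges_m, true_distance_m)) <= spec_m


if __name__ == "__main__":
    # Hypothetical sensor: tightly clustered readings that are all too long.
    measured_m = [10.21, 10.19, 10.20, 10.20]
    reference_m = 10.00
    print(f"bias:   {range_bias(measured_m, reference_m):+.3f} m")
    print(f"spread: {statistics.pstdev(measured_m):.3f} m")
    print("within 5 cm spec:", within_accuracy_spec(measured_m, reference_m, 0.05))
```

The hypothetical readings scatter very little, so the sensor is precise, yet every value is offset from the reference distance, so it is not accurate.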

LiDAR range accuracy is useful in applications that involve measurements of absolute distances, such as volume measurements. Likewise, when a LiDAR is used on drones to generate elevation maps, high accuracy is critical for capturing the topography underneath. In agriculture, for example, this data can be further processed to create 3D models of crops and to monitor drought or different growth stages in order to optimize water use.

Accuracy defines how close a given measurement is to the real value. The distance values of a range-accurate LiDAR are very close to the actual distance.

Field-of-View (FoV)

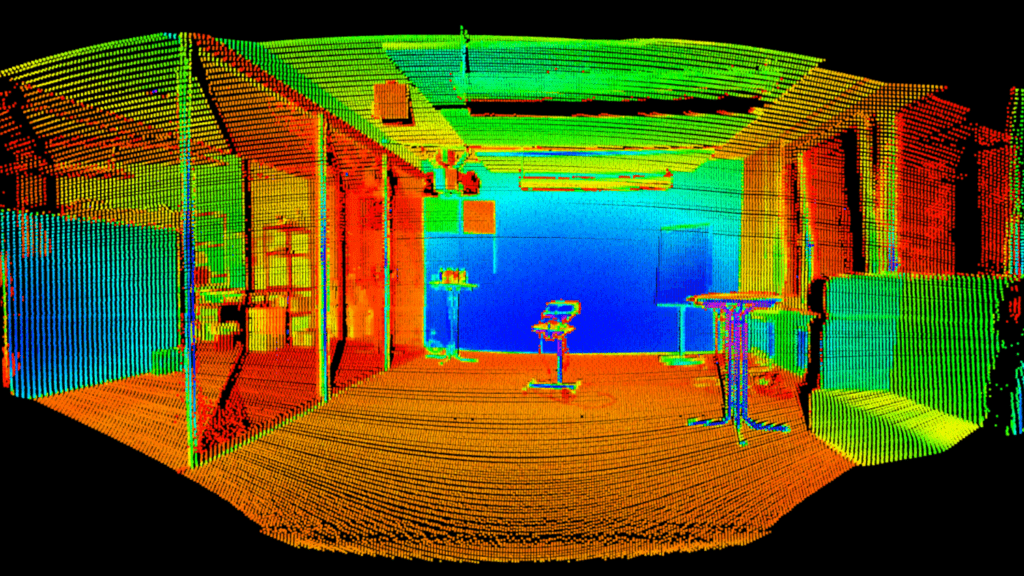

The field-of-view is the angle covered by the LiDAR sensor, or the angle in which LiDAR signals are emitted. It varies significantly depending on the LiDAR technology. Spinning LiDARs, for example, usually generate their field of view by mechanically rotating 16 to 32 stacked laser sources, allowing them to offer a 360-degree view of the surroundings. Solid-state scanning LiDARs, which are less complex and therefore less expensive as well as more robust, use fewer lasers (in Blickfeld’s case only one) that strike one point at a time. In order to illuminate the field of view point by point, the beam is deflected, or “scanned”.

The Blickfeld LiDAR offers outstanding flexibility when it comes to configuring the field-of-view. Assuming that the number of laser pulses emitted per scan cycle stays the same, reducing the vertical scan angle, and thus the vertical FoV, results in a denser point cloud, whereas increasing the vertical FoV spreads the LiDAR returns farther apart. As for the horizontal direction, the point spacing can be changed while maintaining the same FoV.
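The relationship between FoV and point density can be illustrated with a short calculation. This is a rough sketch, assuming the points of one frame are spread evenly over a rectangular field of view and that scan lines are evenly spaced; the function names and all numbers are hypothetical and do not represent the configuration interface or specifications of any sensor.

```python
def point_density_per_sq_deg(points_per_frame, horizontal_fov_deg, vertical_fov_deg):
    """Average number of points per square degree of field of view."""
    if min(points_per_frame, horizontal_fov_deg, vertical_fov_deg) <= 0:
        raise ValueError("all arguments must be positive")
    return points_per_frame / (horizontal_fov_deg * vertical_fov_deg)


def scan_line_spacing_deg(vertical_fov_deg, scan_lines):
    """Vertical angle between neighboring, evenly spaced scan lines."""
    if scan_lines < 2:
        raise ValueError("at least two scan lines are required")
    return vertical_fov_deg / (scan_lines - 1)


if __name__ == "__main__":
    points = 8000  # hypothetical number of points per frame
    for vertical_fov in (30, 15):
        density = point_density_per_sq_deg(points, 70, vertical_fov)
        spacing = scan_line_spacing_deg(vertical_fov, 50)
        print(f"vertical FoV {vertical_fov} deg: "
              f"{density:.2f} points/sq deg, {spacing:.2f} deg between lines")
```

With the point budget held constant, halving the vertical FoV doubles the average density and halves the spacing between scan lines.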

The field-of-view is the angle in which LiDAR signals are emitted.

The FoV varies significantly depending on the LiDAR technology. FoV requirements change according to the needs of the application as well as many other factors, such as the type of objects to be scanned or their surface properties.

For example, a dense forest canopy needs a wider field of view to obtain returns from the ground. On the other hand, application scenarios in cities with high buildings and narrow streets will prefer a narrower angle to get returns from the street level.

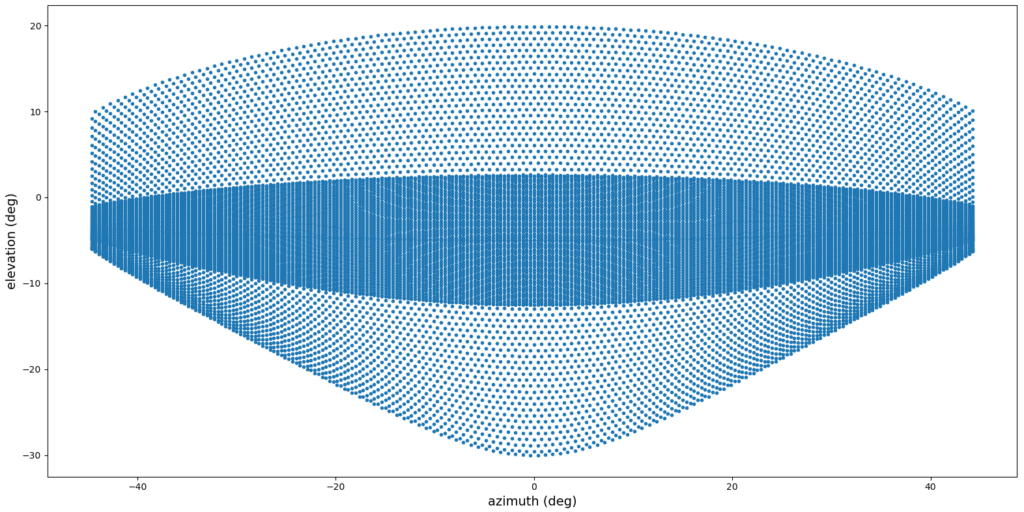

Scan Pattern

From the long list of LiDAR specifications, the scan pattern is the most important and interesting one to consider for scanning LiDARs. Scanning LiDARs have beam deflection units or scanner units that deflect the laser beam in different directions to perform ranging measurements, creating unique patterns in the point cloud. These patterns show different characteristics such as number of scan lines or point density. Depending on the application the LiDAR will be used in, the features of the scan pattern can be important. For example, in a people counting application, depending on the number of people present in a certain area, a high-resolution point cloud can be crucial. In order to achieve the required resolution, a high number of scan lines is needed.

What makes Blickfeld’s sensors special is that the scan lines that comprise the scan pattern can be easily customized, even while using the LiDAR. The sensor can be configured as per the application and its differing needs, e.g. a switch from a general view to a high resolution can be made seamlessly by reconfiguring the scan line density.

Scanning LiDARs deflect the laser beam in different directions to perform ranging measurements, creating unique patterns in the point cloud called scan patterns. These patterns show different characteristics that enable different applications.

Cross Talk Immunity

In many real-time applications where multiple sensors operate simultaneously, cross-talk immunity is another LiDAR specification of critical importance. For example, the LiDAR sensor on an autonomous vehicle could pick up laser signals from another vehicle’s LiDAR sensor within its field of view, leading to a false detection. Consequently, an obstacle on the road could be falsely detected, causing unnecessary and potentially hazardous emergency braking. If the parasitic echo is strong enough, it could even cause the LiDAR to overlook a real object, presenting an even more significant safety hazard. Sunlight also presents a great challenge, as it creates noise and reduces the reliability of the signals as well as the detection range. There are several methods to ensure that cross-talk doesn’t affect LiDAR sensors. Blickfeld employs two of them:

Spectral filtering

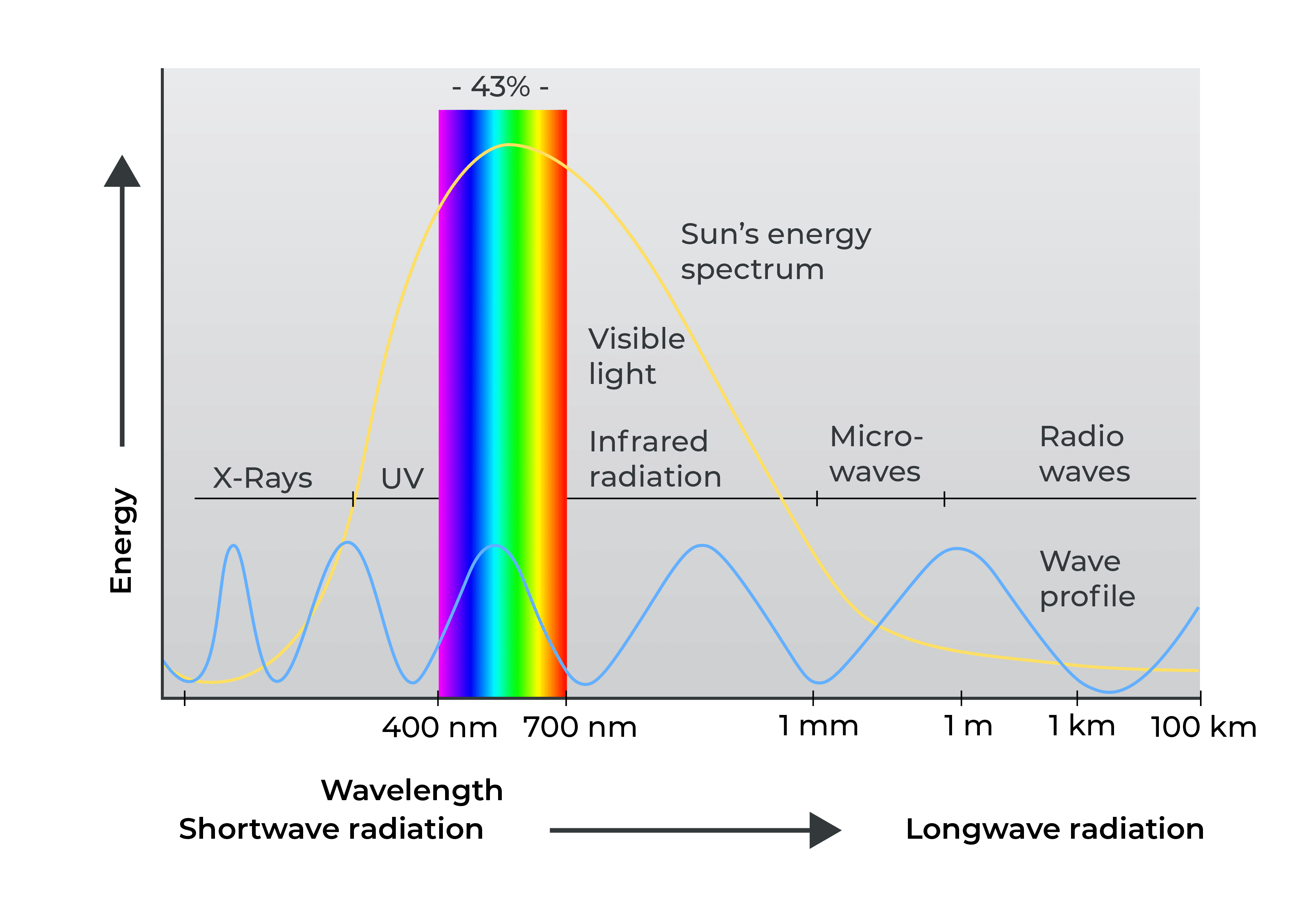

Current LiDAR systems usually use one of two wavelengths: 905 nanometers (nm) or 1550 nm. Each wavelength comes with its own pros and cons, which must be considered before opting for one or the other. Many LiDARs, such as the Blickfeld Cubes, operate at a wavelength of 905 nm, which lies in the near-infrared region of the electromagnetic spectrum. The Cubes also have a filter in front of the detector that only allows electromagnetic waves of a similar wavelength to pass while blocking others. Thus, the Cubes don’t respond to the lasers of other LiDARs that operate at different wavelengths, e.g., 1550 nm. However, the detector can still be tricked by signals from another laser operating at 905 nm. To counter this, a technique called spatial filtering is employed.

Spatial filtering

The coaxial design of the Blickfeld product portfolio allows the sensors to receive the reflection of a laser pulse via the same path as the emission, through the beam deflection unit. This ensures that the detector only captures photons returning from the specific direction in which the pulse was sent and is ‘blind’ to those coming from other directions.

Essentially, another LiDAR sensor would have to send a laser pulse along precisely the same line but in the opposite direction, and within the right time window, for the Cube to detect it and create a false echo. The likelihood of this depends on a number of factors, such as the distance between the LiDARs, scanning rates, beam divergence, and relative orientation, making such an error highly unlikely.

Spectral and spatial filtering also help reduce the noise created by sunlight and minimize its impact on range performance, which is a major challenge for LiDAR sensors.

Crosstalk occurs when a LiDAR receives signals emitted by other sensors, leading to false echo detection. Crosstalk can be minimized by measures such as spectral and spatial filtering.

Detection Rate

The detection rate (DR) or true-positive rate (TPR) is the proportion of frames where a selected point on a real target is detected. In contrast, the false-positive rate (FPR) measures the proportion of frames where an echo is detected in the point cloud although there is no real physical target.

False detections are undesirable as they reduce the point cloud’s accuracy and thus diminish the reliability of object recognition. In the intrusion detection example above, a high false-positive rate leads to more false alarms, while a poor detection rate means that real intrusions may be missed; either way, the results become unreliable.
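Both rates are simple proportions over recorded frames. The sketch below assumes one boolean flag per frame: for the detection rate, whether the selected point on the real target produced an echo; for the false-positive rate, whether an echo appeared in a direction with no physical target. The function names and the sample data are hypothetical.

```python
from typing import Sequence


def _fraction_true(flags: Sequence[bool]) -> float:
    """Share of frames in which the flag is set."""
    if len(flags) == 0:
        raise ValueError("at least one frame is required")
    return sum(flags) / len(flags)


def detection_rate(target_detected: Sequence[bool]) -> float:
    """True-positive rate: frames in which the real target point was detected."""
    return _fraction_true(target_detected)


def false_positive_rate(ghost_echo: Sequence[bool]) -> float:
    """Frames in which an echo appeared although no target was present."""
    return _fraction_true(ghost_echo)


if __name__ == "__main__":
    # Hypothetical recording of ten frames.
    hits = [True, True, True, False, True, True, True, True, True, True]
    ghosts = [False, False, True, False, False, False, False, False, False, False]
    print(f"detection rate:      {detection_rate(hits):.0%}")
    print(f"false-positive rate: {false_positive_rate(ghosts):.0%}")
```

A meaningful evaluation would use far more frames than this toy example and would state the target distance and reflectivity at which the rates were measured.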

The detection rate (DR) or true-positive rate (TPR) is the proportion of frames where a selected point on a real target is detected. False detections are undesirable as they reduce the point cloud’s accuracy and thus diminish the reliability of object recognition.

Multiple Returns

LiDARs usually receive more than one reflection after sending out a beam, because the beam widens, or diverges, over increasing distance. So, while part of the beam hits the closest target, some of it may hit a target located farther away. The reflections therefore arrive back at different times, registering multiple returns.

If the LiDAR can only analyze a single return, it displays only one target, selected either by the algorithm or by the intensity of the reflection. Usually, only the target closest to the sensor is recorded, while the target behind it remains undetected.

LiDARs that can handle multiple returns can also capture information about targets that are partially obstructed by other objects. This increases the amount and depth of data gathered with the same number of emitted laser pulses.

A typical use case would be detecting foliage in a forest from above, where the first return would be the reflection from the treetops. Part of the same laser beam might hit some branches along the way and get reflected, and another part might hit the ground and return. This results in multiple returns, and the LiDAR would potentially register three different distances. In this scenario, the first return is usually the most significant one, as it detects the highest feature in the landscape, the treetop.
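The forest example can be sketched as a time-of-flight calculation: each return arrives after a different round-trip time, and each time maps to a distance of half the round trip multiplied by the speed of light. The function name `return_distances` and the three target distances are hypothetical, chosen only to mirror the treetop, branch, and ground returns described above.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def return_distances(round_trip_times_s):
    """Convert the round-trip times of one pulse into sorted target distances.

    The pulse travels to the target and back, hence the division by two.
    """
    return [SPEED_OF_LIGHT_M_S * t / 2 for t in sorted(round_trip_times_s)]


if __name__ == "__main__":
    # Hypothetical targets below a drone: treetop, branch, ground (in meters).
    targets_m = {"treetop": 30.0, "branch": 35.0, "ground": 45.0}
    times_s = [2 * d / SPEED_OF_LIGHT_M_S for d in targets_m.values()]

    distances = return_distances(times_s)
    print("all returns (m):", [round(d, 2) for d in distances])
    print("first return (m):", round(distances[0], 2))
    print("last return (m):", round(distances[-1], 2))
```

A single-return sensor would report only one of these values, whereas a multi-return sensor can keep all three, so the canopy height can be estimated from the difference between the last and the first return.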

A beam sent by a LiDAR usually widens or diverges over increasing distance and may hit different targets, which leads to multiple returns at different times. LiDARs that can handle multiple returns can detect partially obstructed targets, which increases the amount and depth of data gathered.

LiDAR sensors are at the forefront of the technological revolution and enable a host of applications. They come in all shapes and sizes and, most importantly, are based on different technologies. Understanding the different specifications is therefore pivotal for selecting a suitable sensor for a specific application.
