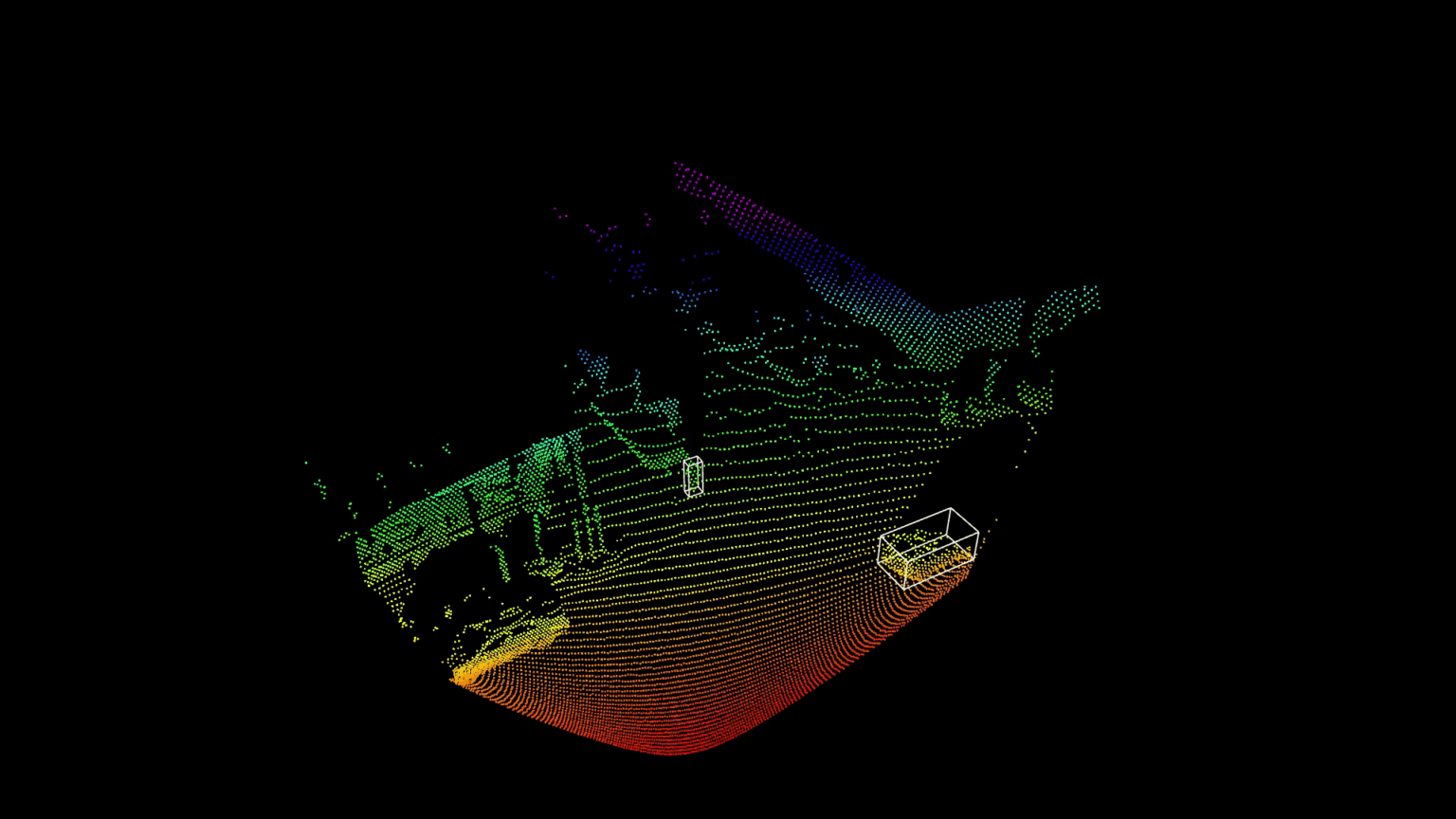

LiDAR data are displayed as “point clouds”, a fascinating representation: a precise 3D image of the environment captured by the sensor. Because the data are three-dimensional, the scene can be viewed from any vantage point.

But users don’t deploy LiDAR sensors because they like beautiful images. The data deliver a wealth of information that enables countless uses, from people analytics to volume measurement to security applications. People don’t spot the necessary information in the pretty point cloud at first sight, but algorithms do a good job of interpreting it.

This article explains how objects are detected and tracked.

Blickfeld Percept

To work with raw LiDAR data in general, users must know how to handle 3D data. Because not everybody does, we at Blickfeld have developed a software product that extracts actionable information from LiDAR-generated data. Blickfeld Percept is simple and intuitive to use and enables applications from the fields of crowd analytics, security or volume monitoring.

Object detection and tracking for recording walking paths

Imagine a market square where a street festival is going on. Various booths have been set up where products, food and beverages are being sold. The organizers would like to know how visitors are moving around the square. Based on this information, they can evaluate which positions were good choices for the sales booths and which offerings are especially popular. For one thing, these insights help booth operators by indicating how appealing their booth looks and whether they should optimize it. They also give the organizers information on how to price each booth site based on foot traffic.

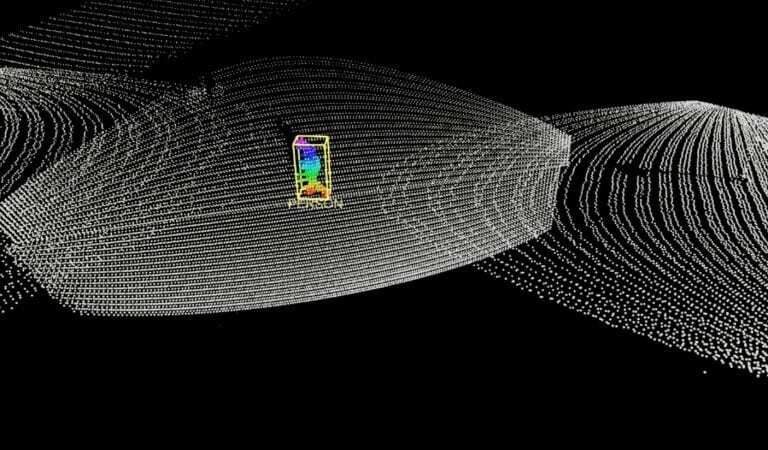

Distinguishing background from foreground to reduce data transfer

To record visitors’ movements at the festival, the visitors need to be detected in the point cloud. The point cloud itself consists of the entire scanned environment as 3D points, meaning that objects need to be distinguished from everything else. At this first stage, “objects” means everything: the visitors, as well as vehicles, benches, strollers, and even dogs.

Since the objects that are to be detected in our example are people moving around on the market square, they are distinguished from the rest by detecting all moving points. This is not necessary for object detection, but it reduces the quantity of data to be transferred and is therefore an advantage in many cases. However, objects can also be detected in completely static point clouds.

Moving points are detected within the point cloud by making a reference recording at the beginning of the measurement period. Everything that is visible on this recording and is not moving is defined as background. This can be filtered out, so that the amount of data to be transferred is drastically reduced. Indoors, this process usually is sufficient, because it can be assumed that the background won’t be changing. To account for future changes in the background (e.g., a market stall moving away), it is continuously identified and updated during operation, and the background is dynamically subtracted. This is done by adding objects that do not move within a predefined period to the background.
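The background handling described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Blickfeld Percept's actual implementation: the voxel grid, the class name `BackgroundModel`, and the parameters `voxel_size` and `static_frames` are all hypothetical. The sketch stores the reference recording as a set of occupied grid cells, filters them out of each new frame, and promotes cells that stay occupied for a predefined number of frames to the background.

```python
import math


class BackgroundModel:
    """Hypothetical voxel-based background filter (illustrative only)."""

    def __init__(self, reference_frame, voxel_size=0.2, static_frames=3):
        self.voxel_size = voxel_size        # grid resolution in metres (assumed)
        self.static_frames = static_frames  # frames until a static cell becomes background
        # Every cell occupied in the reference recording is background.
        self.background = {self._voxel(p) for p in reference_frame}
        self._age = {}  # foreground cell -> consecutive frames it was occupied

    def _voxel(self, point):
        s = self.voxel_size
        return tuple(math.floor(c / s) for c in point)

    def filter(self, frame):
        """Return the foreground points of a frame and update the background."""
        foreground = [p for p in frame if self._voxel(p) not in self.background]

        # Count how long each foreground cell has been continuously occupied;
        # cells that are empty in this frame are dropped from the counter.
        occupied = {self._voxel(p) for p in foreground}
        self._age = {v: self._age.get(v, 0) + 1 for v in occupied}

        # Cells that stayed occupied long enough are treated as static
        # and added to the background for all following frames.
        for voxel, age in list(self._age.items()):
            if age >= self.static_frames:
                self.background.add(voxel)
                del self._age[voxel]
        return foreground
```

Only the points returned by `filter` would need to be transferred. Note that this sketch only adds static objects to the background; removing cells that have become empty (for example after a stall has been taken down) would need an additional rule.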

Which points belong to an object?

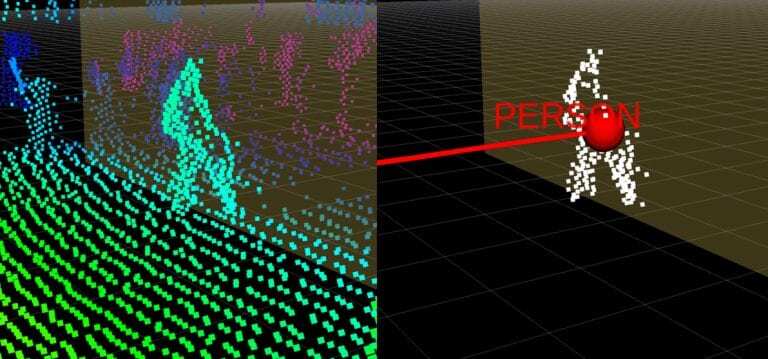

Once everything that is not of interest for object detection has been subtracted from the point cloud by removing the background, all that’s left is the foreground, and therefore the objects. They are defined through “clustering” or segmentation: The moving points in the point cloud are detected, and the distance between several points is measured. Points that are close to each other are clustered into one object. This process works exactly the same in a static point cloud in which the objects don’t move.
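A simple way to picture this clustering step is distance-based region growing: start from one point, collect all points within a maximum gap, and repeat from each newly added point. The sketch below is an assumption about how such a step could look, not the algorithm used in the product; the function name `cluster_points` and the parameters `max_gap` and `min_points` are hypothetical.

```python
import math
from collections import deque


def cluster_points(points, max_gap=0.5, min_points=1):
    """Group point indices whose neighbours lie within max_gap metres.

    Brute-force O(n^2) neighbour search, fine for a small illustration;
    a spatial index would be used for real point clouds.
    """
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        members = [seed]
        while queue:
            i = queue.popleft()
            # Collect all still-unassigned points close to point i.
            near = [j for j in unvisited
                    if math.dist(points[i], points[j]) <= max_gap]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        # Very small clusters can be discarded as noise via min_points.
        if len(members) >= min_points:
            clusters.append(sorted(members))
    return clusters
```

Each returned cluster is a list of indices into `points`, so the original coordinates of every object remain available for the following steps.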

In the next step, the objects are marked with a bounding box and appear in the object list. This type of information is simple to process further and can be easily integrated into existing architectures. Setting up rules on the size and shape of an object makes it possible to identify its type. In our example, where people are being detected, only cylindrical objects within a specific height range are counted and marked as people. Similarly, cars can also be detected by establishing specific parameters.
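The rule-based identification can be illustrated with an axis-aligned bounding box and a few size thresholds. All numbers and names below (`bounding_box`, `classify`, the height and footprint limits) are hypothetical values chosen for the example, and a box is only a rough stand-in for the cylindrical shape mentioned above.

```python
def bounding_box(points):
    """Axis-aligned bounding box of a cluster as (min_corner, max_corner)."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def classify(box):
    """Label a box using assumed size rules (all values in metres)."""
    (x0, y0, z0), (x1, y1, z1) = box
    height = z1 - z0
    footprint = max(x1 - x0, y1 - y0)  # longest horizontal extent

    # Tall and narrow: roughly a standing person.
    if 1.0 <= height <= 2.2 and footprint <= 1.0:
        return "person"
    # Long footprint with moderate height: roughly a passenger car.
    if 1.2 <= height <= 2.5 and 3.0 <= footprint <= 6.0:
        return "car"
    return "unknown"
```

In practice the thresholds would be tuned to the sensor's mounting position and the objects expected in the scene.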

How do the objects move?

To capture a visitor’s walking path, the detected object is now tracked. This is done by anticipating where the object will be positioned in the next frame, based on the previous trajectory of the object and on probability models. For example, if a person is walking from left to right at a speed of one meter per second, it is anticipated that he or she will continue at the same speed in the established direction and will be located at a specific spot in the next frame. The objects detected in the next frame are then assigned to the already tracked objects based on these movement predictions, which links the detections into a continuous track.
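The predict-then-assign idea can be sketched with a constant-velocity prediction and a greedy nearest-neighbour assignment. This is a deliberate simplification: the probability models mentioned above (a Kalman filter would be one common choice) are replaced here by a plain distance threshold, and the names `Track`, `Tracker` and `max_distance` are hypothetical.

```python
import math
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Track:
    track_id: int
    position: tuple               # (x, y) in metres
    velocity: tuple = (0.0, 0.0)  # metres per second
    path: list = field(default_factory=list)

    def predict(self, dt):
        """Expected position after dt seconds at constant velocity."""
        return (self.position[0] + self.velocity[0] * dt,
                self.position[1] + self.velocity[1] * dt)


class Tracker:
    def __init__(self, max_distance=1.0):
        self.max_distance = max_distance  # assumed gating radius in metres
        self.tracks = []
        self._ids = count(1)

    def update(self, detections, dt):
        """Assign this frame's detections to tracks; dt is the frame interval."""
        unmatched = list(detections)
        for track in self.tracks:
            if not unmatched:
                break
            predicted = track.predict(dt)
            nearest = min(unmatched, key=lambda d: math.dist(predicted, d))
            if math.dist(predicted, nearest) <= self.max_distance:
                unmatched.remove(nearest)
                track.velocity = ((nearest[0] - track.position[0]) / dt,
                                  (nearest[1] - track.position[1]) / dt)
                track.position = nearest
                track.path.append(nearest)
        # Detections that fit no prediction start new tracks.
        for detection in unmatched:
            self.tracks.append(Track(next(self._ids), detection, path=[detection]))
        return self.tracks
```

Each track's `path` is the recorded walking path. The sketch never deletes tracks that stop receiving detections and resolves conflicts greedily in track order; a production tracker would handle both cases more carefully.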

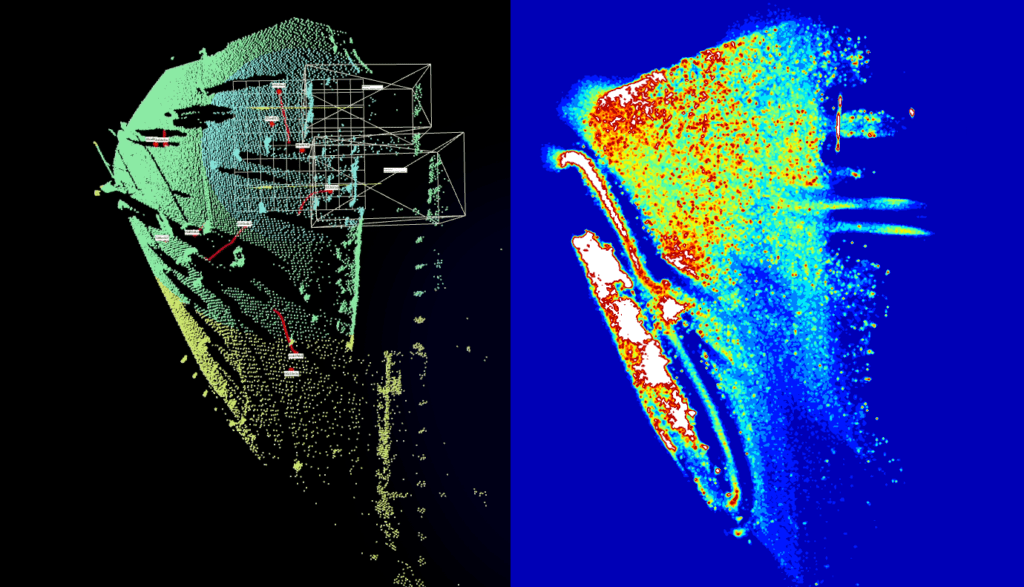

Graphic representation in a heat map

By recording visitors’ walking paths, the presence at individual booths is analyzed and a “heat map” is created. It shows which locations drew the greatest number of visitors and were therefore the most popular. For the next event, the organizers can use this information when assigning booth locations.
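As a rough illustration, a heat map can be built by dividing the square into grid cells and counting how many walking paths pass through each cell. The function name `heat_map` and the parameter `cell_size` are assumptions made for this sketch.

```python
import math
from collections import Counter


def heat_map(paths, cell_size=1.0):
    """Count, per grid cell, how many visitors' paths touched that cell.

    paths: one list of (x, y) positions in metres per tracked visitor.
    """
    counts = Counter()
    for path in paths:
        # A set ensures each visitor is counted once per cell,
        # no matter how long he or she stayed there.
        visited = {(math.floor(x / cell_size), math.floor(y / cell_size))
                   for x, y in path}
        counts.update(visited)
    return counts
```

Counting each visitor once per cell measures how many people a location attracted. Counting every recorded position instead would weight the map by dwell time, which answers a slightly different question.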

Heat maps are also very informative at trade shows, for example. By recording visitors at a booth and analyzing their routes, LiDAR sensors can clearly identify which exhibits were of particular interest to the visitors, and which were not. During the event, this information can lead to changes in staffing or even in booth design, and in any case these insights can be incorporated into subsequent booth planning.

A basis for further insights

Detection and tracking of objects are the basis for further forms of point cloud analysis that enable applications such as people counting, occupancy detection or entry detection.