LiDAR sensors have proven to be a key technology for many sensor-based applications, but they have also attracted many unwarranted misconceptions and myths. Here’s the second part of the blog series, which addresses and debunks some more common myths about LiDAR. You can read the first part here.

1. Myth: LiDAR is the same as a camera in terms of privacy

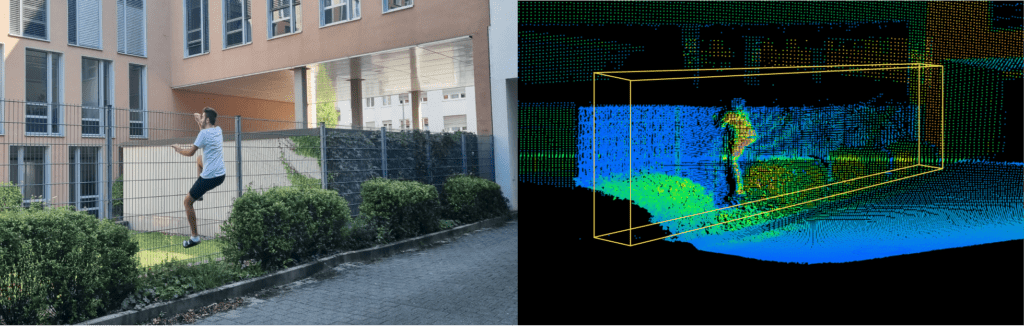

Unlike cameras, LiDAR doesn’t record any color information. It only captures the 3D distance data to create the point cloud, thus generating an anonymized picture of the entire scene while protecting privacy.

As the usage of sensors has risen over the years, so have privacy concerns. For instance, smart city projects worldwide have stirred controversy over data collection and use, so much so that the European Union has even been considering a ban on facial recognition technology in public spaces until the authorities can study and regulate its use.

These concerns primarily revolve around sensors that can capture and store pictures of people and then use that data for identification through facial recognition. While the debate on the merits of surveillance and identification applications is indeed a difficult social question, LiDAR technology is well placed to play a pivotal part in easing privacy concerns.

LiDAR can track pedestrians, vehicles, and other objects with a great degree of reliability and accuracy using algorithms that analyze the point cloud data. But it doesn’t record any color information and only captures the 3D distance data to create the point cloud. Thus, it generates an anonymized picture of the entire scene, making the sensor invaluable in privacy-sensitive applications like perimeter security, crowd management, and people counting.

You can read more in this blog post about how LiDAR sensors capture and process data while keeping the people in the scene completely anonymous.

2. Myth: LiDAR isn’t safe for the human eye

One of the common myths about LiDAR is that it is not safe for the human eye. On the contrary, LiDAR products are manufactured to meet the Class 1 (eye-safe) classification of the IEC 60825-1:2014 standard, which ensures eye safety.

Eye safety for LiDAR depends on a combination of factors, not just the laser’s wavelength. For instance, the safety rating of a LiDAR depends heavily on the peak power of the laser, which in turn directly affects the range of the sensor at a given wavelength. In general, the eye is more sensitive to lasers at the 905 nm wavelength. Therefore, this type of laser is operated at low peak power to remain within the eye-safe region.

In contrast, LiDARs operating with the 1550 nm wavelength range can safely employ higher power thresholds and have longer ranges than 905 nm lasers while remaining within the eye-safe region. This is because the eye’s cornea, lens, and aqueous and vitreous humors effectively absorb any wavelengths greater than 1400 nm, alleviating the risk of retinal damage at longer wavelengths.

Importantly, these eye-safe combinations of peak power and wavelength are defined by the Class 1 limits of the IEC 60825-1:2014 standard, which applies to every manufacturer of laser products in the wavelength range from 180 nm to 1 mm and thus guarantees safe operation. By following these regulations, every LiDAR can be eye-safe!

There have been many online discussions about the possibility of multiple LiDARs emitting pulses at the same wavelength and phase, e.g., at a road intersection. Could they combine to create a higher-energy beam that is not eye-safe? Theoretically, these pulses can superimpose constructively, meaning their combined peak power can increase and possibly go beyond the eye-safe region.

As disconcerting as that might sound, it is virtually impossible in the real world. This is because the other LiDAR sensors would have to send a laser pulse with a perfectly aligned combination of factors such as the pulse duration, divergence angle, and exposure direction with respect to the human eye’s position for this high-energy laser to be generated. This makes it highly unlikely for any two or more LiDAR waves to overlap at one point in space and time.

3. Myth: iPhone LiDARs and large-scale LiDARs have similar functionalities

There are indeed significant differences in technology, performance, and application scope between the LiDAR used in the iPhone and professional LiDAR systems such as the Blickfeld Qb series.

Since Apple first introduced LiDAR sensors in consumer devices like the iPad Pro and iPhone 12 Pro in 2020, the technology has evolved, particularly in the areas of camera enhancement, augmented reality (AR), and spatial depth detection. Yet for all its appeal, it’s important to note: the iPhone’s LiDAR is not a substitute for industrial-grade scanning LiDAR systems used in sectors such as automotive, security, logistics, or smart cities.

The iPhone LiDAR sensor relies on Flash LiDAR technology. This approach illuminates the entire field of view simultaneously with a wide, pulsed laser beam. The system then measures the time of flight of the reflected light to determine the distance to surrounding objects.
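The time-of-flight principle described above comes down to one line of arithmetic: the pulse travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. The following is a minimal sketch of that relationship only. The function names are made up for illustration and do not correspond to any Apple or Blickfeld API.

```python
# Illustrative sketch of the time-of-flight relationship; the function
# names are hypothetical and not part of any vendor API.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact value by SI definition


def distance_from_time_of_flight(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time must be non-negative")
    # The light covers the sensor-to-object distance twice, so halve it.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_time_for_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for an object at distance_m."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # An object 5 m away returns its echo after roughly 33 nanoseconds.
    echo_delay = round_trip_time_for_distance(5.0)
    print(f"round trip for 5 m: {echo_delay * 1e9:.2f} ns")
    print(f"distance recovered: {distance_from_time_of_flight(echo_delay):.3f} m")
```

The nanosecond scale of these delays is why timing precision matters so much for range accuracy: an error of one nanosecond in the measured round trip shifts the distance by about 15 cm.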

In contrast, industrial LiDAR systems – such as those developed by Blickfeld – often use Scanning LiDAR technology. A tightly focused laser beam is directed systematically across the field of view. This enables significantly higher range and point density, resulting in highly detailed 3D representations of complex environments.

The most striking difference between consumer-grade and industrial LiDAR is range. Industrial applications demand far greater range performance than what the iPhone offers. Resolution is another critical factor: Scanning LiDARs deliver several hundred scan lines per second, producing dense point clouds suitable for high-precision environmental modeling.

Apple has undeniably played a major role in raising awareness of LiDAR as a key technology by integrating it into its consumer devices. The iPhone LiDAR enhances autofocus in low light, improves AR experiences, and enables new capabilities for mobile applications.

However, when it comes to industrial use cases, where reliability, range, resolution, and environmental robustness are essential, the iPhone LiDAR remains an impressive, yet not comparable, tool. It is part of a growing LiDAR ecosystem, but simply fulfills a different role within the value chain.

4. Myth: LiDARs have very limited applications

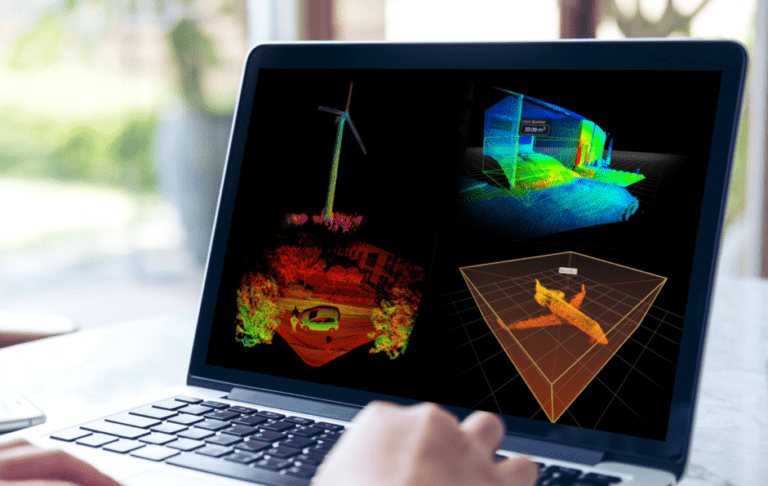

From fleet management to agriculture and security to smart city applications, the sky’s the limit for LiDAR applications!

Although the integration of LiDAR into iPhones has already started to challenge this notion, a persistent myth holds that LiDAR is a niche technology with only a handful of applications. This could be attributed to the fact that the mainstream press and public discussions generally associate LiDAR only with autonomous driving. While it is true that autonomous driving is inconceivable without LiDAR sensors, they also have many other applications touching all walks of life.

For example, in critical infrastructure environments, such as airports, power plants, or industrial facilities, LiDAR is used for invisible yet highly precise perimeter monitoring. Unlike conventional cameras, LiDAR operates independently of lighting conditions, reliably detecting objects even in darkness or fog, and can differentiate between humans, animals, and vehicles. This enables automated alarm systems to trigger with minimal false positives.
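As a rough illustration of the perimeter monitoring idea, one simple building block is checking whether a detected object lies inside a protected zone drawn on the ground plane. The sketch below assumes that an upstream perception step has already reduced each object to a label and an (x, y) centroid in metres. The `Detection` type, the labels, and the function names are hypothetical and not taken from any particular LiDAR product. The zone test uses the standard ray-casting method.

```python
from typing import List, NamedTuple, Sequence, Tuple

Point2D = Tuple[float, float]


class Detection(NamedTuple):
    """Hypothetical output of an upstream object-detection step."""
    label: str  # e.g. "human", "animal", "vehicle"
    x: float    # centroid position on the ground plane, metres
    y: float


def point_in_polygon(x: float, y: float, polygon: Sequence[Point2D]) -> bool:
    """Ray casting: count how many polygon edges a ray towards +x crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be hit.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def intrusions(detections: Sequence[Detection],
               zone: Sequence[Point2D],
               alarm_labels: Sequence[str] = ("human", "vehicle")) -> List[Detection]:
    """Return detections inside the zone whose label should raise an alarm."""
    return [d for d in detections
            if d.label in alarm_labels and point_in_polygon(d.x, d.y, zone)]


if __name__ == "__main__":
    zone = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
    seen = [Detection("human", 5.0, 5.0),
            Detection("animal", 2.0, 2.0),
            Detection("vehicle", 15.0, 5.0)]
    for hit in intrusions(seen, zone):
        print("alarm:", hit)
```

Filtering by label before raising an alarm is one way the object classification mentioned above can help cut false positives, for example by ignoring animals that wander through the zone.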

In construction, mining, and waste management, LiDAR plays a key role in accurate volume measurement of stockpiles, tailings, or waste bunkers. LiDAR systems capture the exact geometry of material heaps down to the millimeter – offering a significant efficiency boost over manual measurements or lower-resolution imaging. The resulting precise 3D data allows for accurate billing, optimized inventory logistics, and improved workplace safety.
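To make the volume measurement idea concrete, here is a minimal sketch of one common way to estimate the volume of a heap from a point cloud: bin the points into a grid on the ground plane, take a surface height per cell, and sum height times cell area. It assumes the points are (x, y, z) tuples in metres with z pointing up and a flat ground at a known height. The function name and parameters are illustrative, and production systems typically use more refined surface models.

```python
import math
from typing import Dict, Iterable, Tuple

Point3D = Tuple[float, float, float]


def estimate_heap_volume(points: Iterable[Point3D],
                         cell_size: float = 0.5,
                         ground_z: float = 0.0) -> float:
    """Estimate heap volume in cubic metres from (x, y, z) points.

    Points are binned into square cells on the ground plane. The highest
    point above the ground in each cell is taken as the surface height,
    and the volume is the sum of height * cell area over occupied cells.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")

    max_height: Dict[Tuple[int, int], float] = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        height = z - ground_z
        # Points at or below ground level do not add volume.
        if height > max_height.get(cell, 0.0):
            max_height[cell] = height

    return sum(max_height.values()) * cell_size ** 2


if __name__ == "__main__":
    # Four points, one per 1 m x 1 m cell, each 1 m above the ground.
    flat_top = [(0.5, 0.5, 1.0), (1.5, 0.5, 1.0),
                (0.5, 1.5, 1.0), (1.5, 1.5, 1.0)]
    print(estimate_heap_volume(flat_top, cell_size=1.0), "cubic metres")
```

Using the highest point per cell tends to overestimate slightly on steep slopes, and cells with no returns contribute nothing, so the cell size is a trade-off between resolution and coverage. Averaging the heights in each cell is a reasonable alternative.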

In agriculture, LiDAR sensors assist in automated and autonomous maneuvering of farming machinery, detecting environmental conditions, and monitoring tasks such as seeding and fertilizing. These systems enhance productivity while supporting the move toward precision farming.

In urban environments, LiDAR supports the development of intelligent traffic management systems. Pedestrian flows, vehicle movements, or bicycle traffic can be tracked anonymously to dynamically control intersections, mitigate accident-prone zones, or align infrastructure with real-world mobility patterns.

Other LiDAR applications include integration into drones and delivery robots, as well as systems for people counting and crowd control.

These examples highlight just a few of the common misconceptions and myths surrounding LiDAR technology and its use cases. The importance of LiDAR sensors for the future of technology is undisputed. And as the world continues its shift toward automation, LiDAR and its applications will undoubtedly become a more frequent and essential part of the technological conversation.