LiDAR data processing for object detection

LiDAR sensors produce point clouds that map the environment in 3D. This “cloud” of distance measurements contains a wealth of valuable information about the environment. For some applications, however, the raw data is too complex to process further: crowd management, traffic monitoring, or perimeter protection, for example, need a list of the objects detected in the field of view instead. How is this information obtained from LiDAR data? How does LiDAR data processing work?

To find out, let’s look at an example application: The city of Lidartown is celebrating its annual city festival. Among other activities, a free concert is taking place on a large parking lot in the city center. Because it is free, spectator access is not regulated by tickets. For security reasons, however, no more than 1000 people are allowed on the premises. To ensure this, the city has installed a LiDAR sensor that overlooks the entrance to the concert area and tracks who passes through it. The entrance is a wide passageway through which visitors also leave the premises.

Precision and anonymity through 3D data

When it comes to this task, a LiDAR has several advantages over alternatives such as cameras, motion sensors, or manual counting by city employees. First, it captures the scene reliably and in great detail in real time. Conventional motion sensors, for example, struggle to produce accurate counts as soon as several people enter the scene simultaneously. If three people walk side by side, the two on the outside may be captured while the person in the middle is not counted. Second, LiDAR protects the privacy of concertgoers by recording colorless 3D data instead of camera images with faces.

How many people are on the festival site?

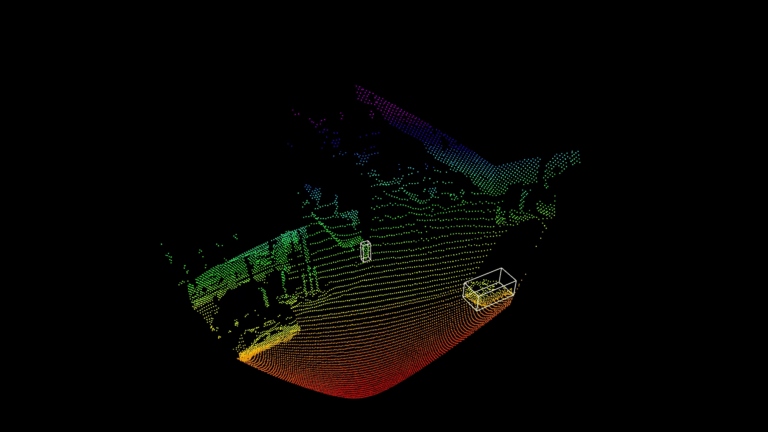

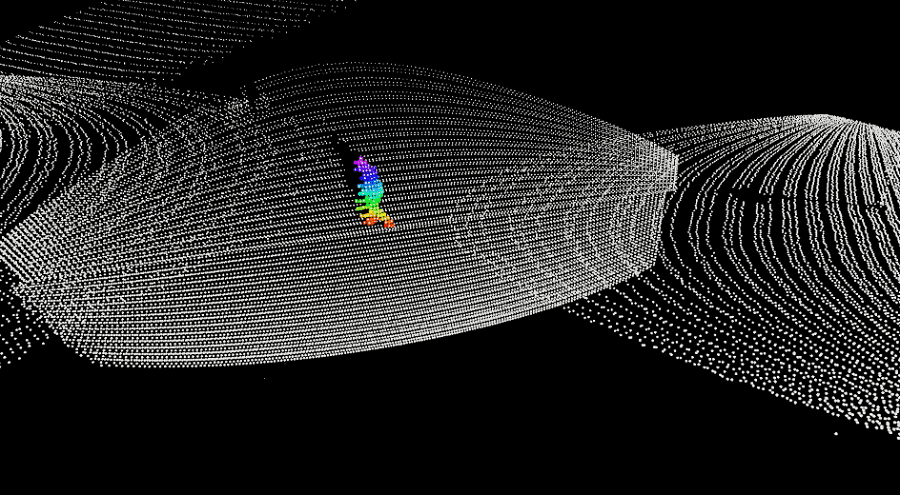

As admission begins, many people stream onto the site. During this process, the sensor collects millions of distance measurements, which are combined into a point cloud containing x, y, and z coordinates for each recorded point. This raw data is far too detailed for the visitor-counting application. The organizers do not need it in detail; they need to know the total number of visitors on the site. Essentially, three pieces of information must be provided in real time. First, the organizers need to know how many people pass through the entrance; the software recognizes them as objects in the point cloud. Second, the exact location of each identified person is required: Are they already past the entrance and thus on the premises, or are they still outside the boundary, for example in the queue in front of the entrance? Third, the direction of movement is needed, so that people leaving the premises can be detected and deducted from the count of those present.

Which points need to be considered?

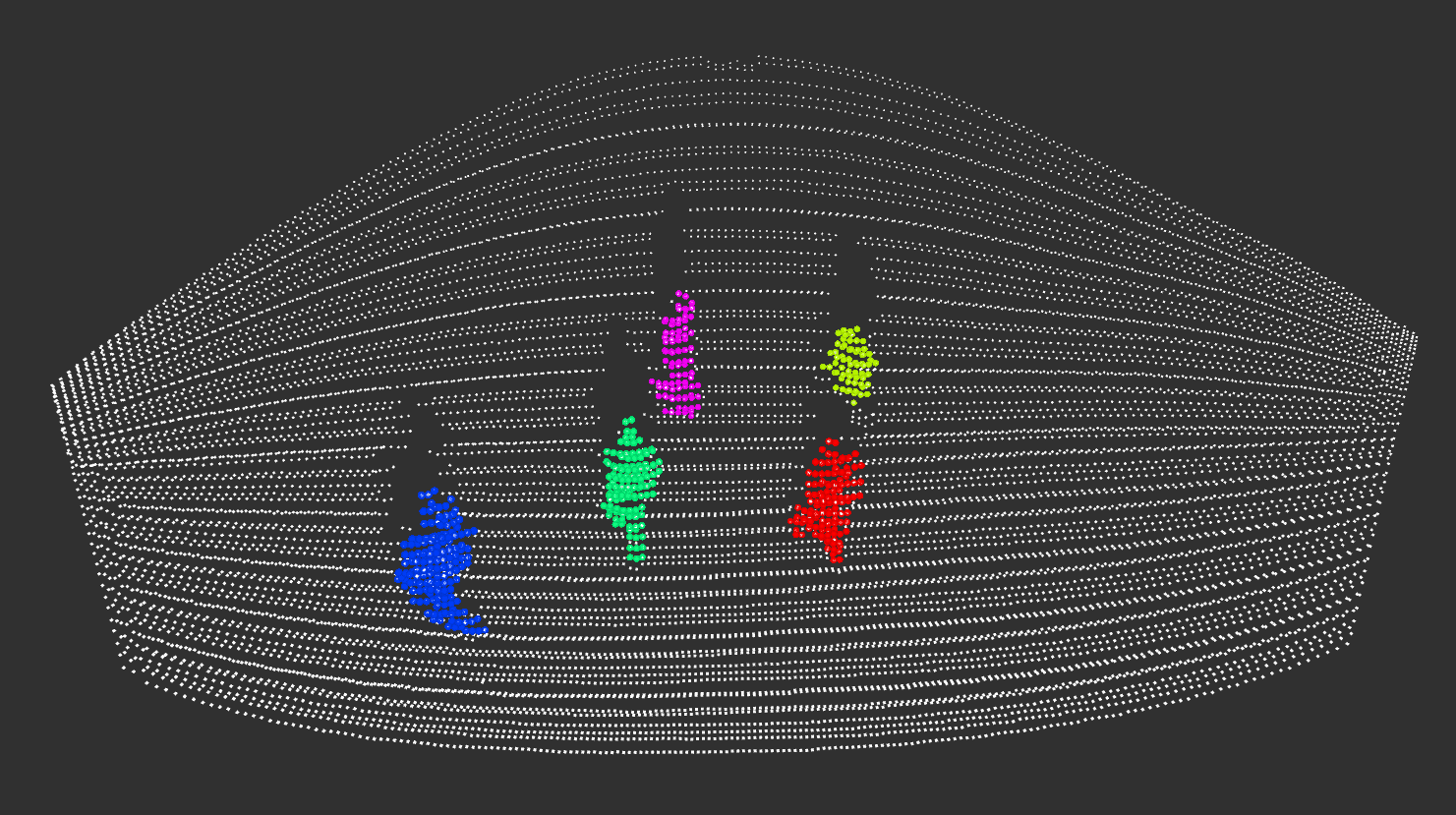

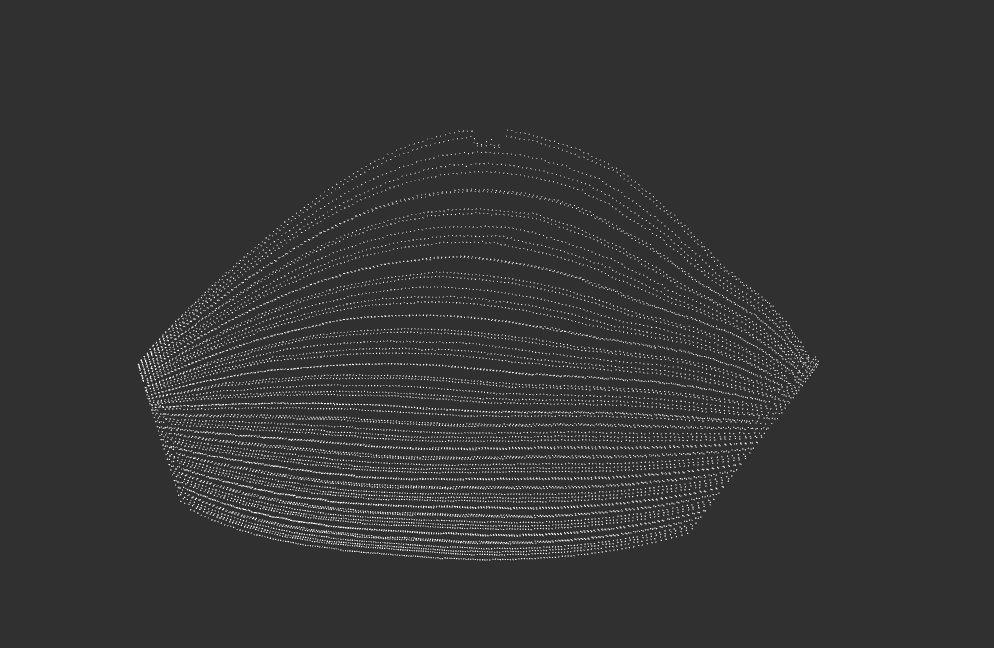

How exactly is this information obtained from the complex LiDAR point cloud? To understand this, we will look at a simplified algorithm for data evaluation. First, the foreground needs to be separated from the background. To do this, the scene is analyzed and the background is subtracted so that the remaining point clusters can be identified. For this purpose, the static background is recorded as a point cloud before visitors arrive at the site. With this “status quo” on record, the software can determine which point clusters can be ignored because they are static and belong to the background. As soon as people enter the site, every captured frame is compared with the recorded background to find the points that are new.
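The background subtraction step can be illustrated with a minimal sketch. It assumes the recorded background is stored as a set of occupied grid cells (voxels); the cell size `VOXEL_SIZE` and all function names are illustrative assumptions, not part of any particular LiDAR software. Real systems also have to cope with sensor noise and slowly changing backgrounds, which this sketch ignores.

```python
import math

VOXEL_SIZE = 0.2  # metres; hypothetical grid resolution chosen for illustration


def voxel_of(point, size=VOXEL_SIZE):
    """Map an (x, y, z) point to the integer index of the grid cell it falls in."""
    return tuple(math.floor(c / size) for c in point)


def build_background(points, size=VOXEL_SIZE):
    """Record which grid cells are occupied in the empty scene."""
    return {voxel_of(p, size) for p in points}


def foreground(frame, background, size=VOXEL_SIZE):
    """Keep only the points that fall into cells not occupied by the background."""
    return [p for p in frame if voxel_of(p, size) not in background]


# Empty scene: three points on the ground, recorded before visitors arrive.
background_scan = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
background = build_background(background_scan)

# Later frame: the same ground points plus two points from a person.
frame = background_scan + [(1.05, 1.0, 0.9), (1.10, 1.0, 1.7)]
moving = foreground(frame, background)
print(moving)
```

Only the two points that are not explained by the background remain and are passed on to the next processing step.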

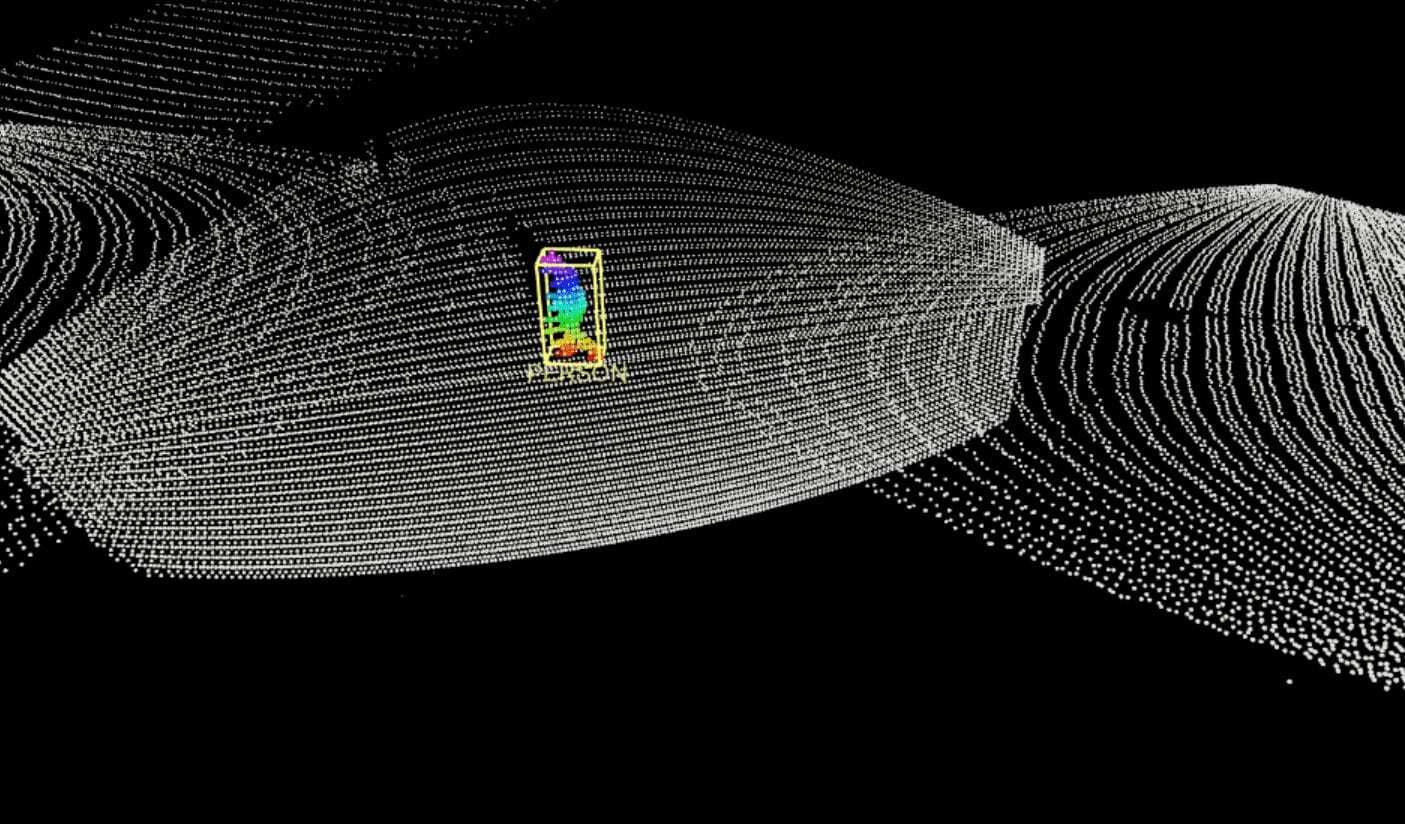

About hulls and boxes

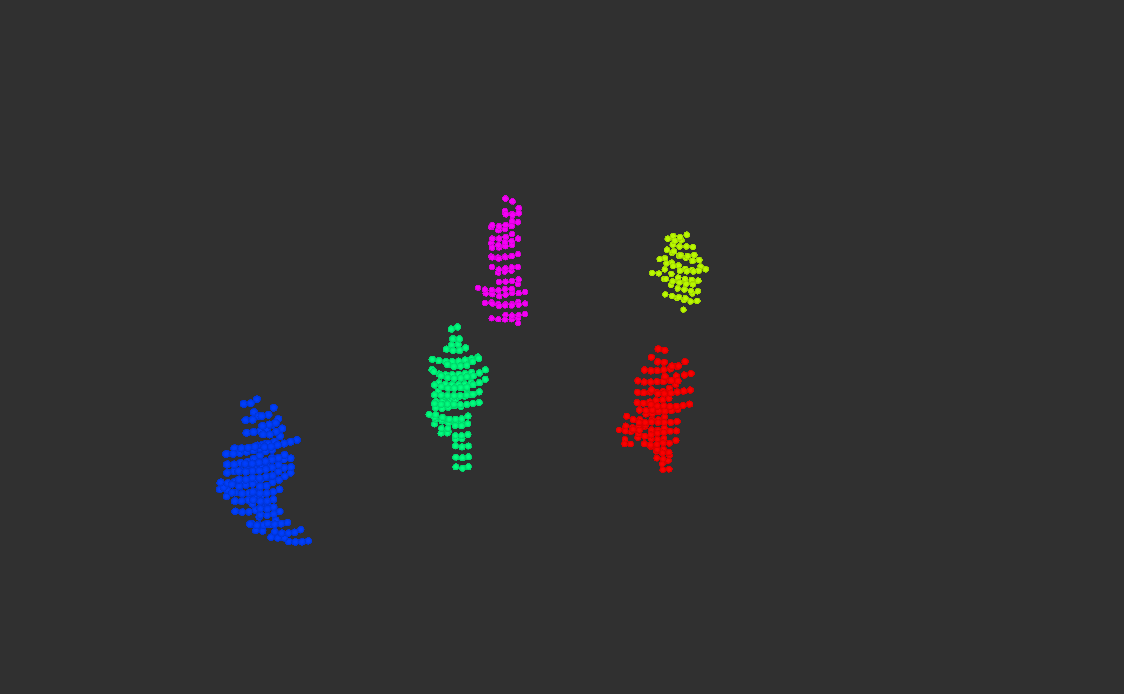

When visitors arrive, the point cloud changes and new clusters appear. If a cluster is not present in the recorded background, the software recognizes that a moving object has entered the area. Based on the object’s convex hull, a so-called bounding box is then drawn around it. The convex hull is the smallest convex shape that encloses all points of the object; it is obtained by connecting the object’s outermost points. The bounding box is the smallest cuboid that encloses the object, which makes the information easier to process. Both steps reduce the amount of data and make it easier to handle.
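The steps from foreground points to hull and box might be sketched as follows. This is a simplified illustration under several assumptions: clusters are formed by naive distance-based region growing with a hypothetical `max_gap` threshold, the convex hull is computed only for the 2D ground-plane footprint (using the monotone chain method), and the bounding box is axis-aligned rather than the tightest possible cuboid. All names are illustrative.

```python
import math
from collections import deque


def cluster_points(points, max_gap=0.5):
    """Group points that are linked by neighbours at most max_gap metres apart."""
    unvisited = set(range(len(points)))
    clusters = []
    for seed in range(len(points)):
        if seed not in unvisited:
            continue
        unvisited.remove(seed)
        queue, members = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            near = [j for j in unvisited
                    if math.dist(points[i], points[j]) <= max_gap]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        clusters.append([points[k] for k in sorted(members)])
    return clusters


def convex_hull_2d(points_xy):
    """Monotone chain convex hull; returns the hull corners counter-clockwise."""
    pts = sorted(set(points_xy))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half_hull(sequence):
        chain = []
        for p in sequence:
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain

    lower = half_hull(pts)
    upper = half_hull(reversed(pts))
    return lower[:-1] + upper[:-1]


def bounding_box(points):
    """Axis-aligned box as (min corner, max corner)."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


# Two made-up groups of foreground points, about three metres apart.
object_a = [(0.0, 0.0, 0.0), (0.4, 0.0, 0.0), (0.4, 0.4, 0.0), (0.0, 0.4, 0.0),
            (0.2, 0.2, 0.0), (0.2, 0.2, 0.4), (0.2, 0.2, 0.8)]
object_b = [(3.0, 1.0, 0.0), (3.0, 1.0, 0.4), (3.2, 1.0, 0.8)]

clusters = cluster_points(object_a + object_b)
footprint = convex_hull_2d([(x, y) for x, y, _ in clusters[0]])
box = bounding_box(clusters[0])
print(len(clusters), footprint, box)
```

The hull keeps only the four outer corners of the first object’s footprint and drops the interior point, and the box reduces the whole cluster to two corner coordinates.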

The size of this bounding box helps with a rough classification of the object. In road traffic, for example, it is used to determine whether the detected object is a car, a bicycle, or a pedestrian. In our city festival example, bicycles and cars are unlikely to be present on the site, but the bounding box size still helps to confirm that an object is a human being. The software thus assigns the objects in the entrance area to the rough category “human” and can determine how many of them there are.
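A size-based rough classification could look like the following sketch. The thresholds are purely illustrative assumptions and are not taken from any real product; a production system would tune them or use a trained classifier.

```python
def box_size(box):
    """Return (length, width, height) of an axis-aligned box given as two corners."""
    (x0, y0, z0), (x1, y1, z1) = box
    return (x1 - x0, y1 - y0, z1 - z0)


def classify(box):
    """Assign a rough category from box dimensions (hypothetical thresholds, metres)."""
    length, width, height = box_size(box)
    footprint = max(length, width)
    if footprint <= 1.0:
        return "human" if 0.8 <= height <= 2.2 else "unknown"
    if footprint <= 2.5:
        return "bicycle"
    if footprint <= 6.0:
        return "car"
    return "unknown"


boxes = [
    ((0.0, 0.0, 0.0), (0.5, 0.4, 1.8)),   # person-sized
    ((0.0, 0.0, 0.0), (1.8, 0.6, 1.1)),   # bicycle-sized
    ((0.0, 0.0, 0.0), (4.5, 1.8, 1.5)),   # car-sized
]
labels = [classify(b) for b in boxes]
humans = labels.count("human")
print(labels, humans)
```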

How do people move?

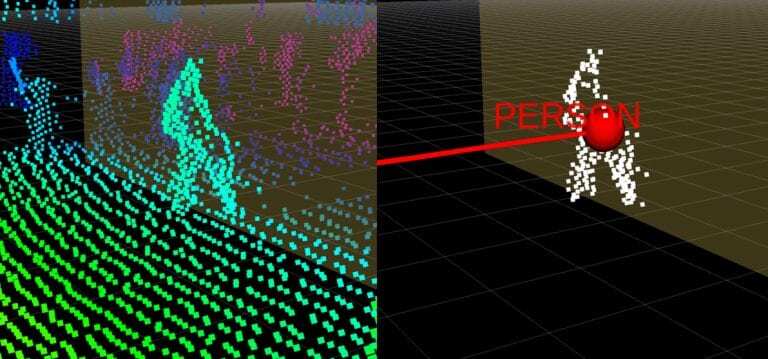

The size of the objects in the entrance area has thus been identified, which leaves position and direction of movement. How is this information derived? The exact position of a person can easily be extracted from the bounding box, because position is at the core of LiDAR information: LiDAR sensors ultimately measure distances.
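As a small illustration, the position can be taken as the center of the bounding box and then compared with the entrance boundary. The sketch assumes, hypothetically, that the entrance lies along the line y = 0 and that positive y values are inside the premises; the names are illustrative.

```python
ENTRANCE_Y = 0.0  # hypothetical: entrance line at y = 0, premises at y >= 0


def box_center(box):
    """Center of an axis-aligned box given as (min corner, max corner)."""
    (x0, y0, z0), (x1, y1, z1) = box
    return ((x0 + x1) / 2, (y0 + y1) / 2, (z0 + z1) / 2)


def is_on_premises(position, entrance_y=ENTRANCE_Y):
    """True if the position lies on the premises side of the entrance line."""
    return position[1] >= entrance_y


visitor_box = ((1.0, 2.0, 0.0), (1.5, 2.5, 1.8))
queue_box = ((1.0, -3.0, 0.0), (1.5, -2.5, 1.7))

visitor_pos = box_center(visitor_box)
queue_pos = box_center(queue_box)
print(visitor_pos, is_on_premises(visitor_pos))
print(queue_pos, is_on_premises(queue_pos))
```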

The direction of movement, in turn, can be determined by observing the position of the object in previous frames. Since the frame rate is known, comparing the object’s position across several frames shows where it is moving and how fast. As soon as the same cluster of points has been identified in two or more frames, this speed and direction can be used to anticipate the object’s further path.
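The frame-to-frame comparison can be sketched in a few lines. The frame rate of 10 Hz is an assumed value for illustration, positions are simplified to 2D ground-plane coordinates, and the prediction assumes constant velocity.

```python
import math

FRAME_RATE_HZ = 10.0  # hypothetical sensor frame rate


def velocity(prev_pos, curr_pos, frames_apart=1, frame_rate=FRAME_RATE_HZ):
    """Velocity in m/s from two positions that are frames_apart frames apart."""
    dt = frames_apart / frame_rate
    return tuple((c - p) / dt for p, c in zip(prev_pos, curr_pos))


def predict(pos, vel, frames_ahead=1, frame_rate=FRAME_RATE_HZ):
    """Constant-velocity estimate of the position frames_ahead frames later."""
    dt = frames_ahead / frame_rate
    return tuple(p + v * dt for p, v in zip(pos, vel))


# The same object seen five frames apart, walking in the +y direction.
earlier = (0.0, 2.0)
now = (0.0, 2.5)

vel = velocity(earlier, now, frames_apart=5)
speed = math.hypot(*vel)
expected_next = predict(now, vel, frames_ahead=10)
print(vel, speed, expected_next)
```

The sign of the velocity component across the entrance indicates whether a person is entering or leaving.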

The result: The object list

This information is made available in a so-called object list. Each row of this table records one detected object and its properties, in our case size, position, and direction of movement. Data in this form can easily be processed further and, in the concert example, used to determine the exact number of people on the premises at any time. This enables the system to recognize when the maximum number of visitors has been reached and admission must be stopped. It is also possible to determine exactly when the most people were on the site or when the crowd at the entrance was at its largest. This data can be useful for planning future events.
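One way to picture an object list and the counting built on it is the sketch below. The record layout is an assumption: the `object_id` field, which lets the same person be matched across frames, is not mentioned in the text and is added here only to make the example work. The entrance is again assumed to lie along y = 0, with the premises at y >= 0.

```python
from dataclasses import dataclass

ENTRANCE_Y = 0.0  # hypothetical entrance line; premises at y >= 0


@dataclass
class DetectedObject:
    object_id: int    # assumed tracking ID, used to match objects across frames
    size: tuple       # (length, width, height) in metres
    position: tuple   # (x, y) on the ground plane in metres
    velocity: tuple   # (vx, vy) in m/s


def update_count(count, previous, current, entrance_y=ENTRANCE_Y):
    """Add one for each object that crossed into the premises, subtract one for each that left."""
    before_by_id = {obj.object_id: obj for obj in previous}
    for obj in current:
        before = before_by_id.get(obj.object_id)
        if before is None:
            continue  # first sighting: no crossing can be determined yet
        was_inside = before.position[1] >= entrance_y
        is_inside = obj.position[1] >= entrance_y
        if is_inside and not was_inside:
            count += 1
        elif was_inside and not is_inside:
            count -= 1
    return count


person = (0.5, 0.4, 1.8)
previous_list = [
    DetectedObject(1, person, (0.0, -0.5), (0.0, 1.0)),   # about to enter
    DetectedObject(2, person, (1.0, 0.4), (0.0, -1.0)),   # about to leave
    DetectedObject(3, person, (2.0, -2.0), (0.0, 0.0)),   # waiting in the queue
    DetectedObject(4, person, (3.0, -0.2), (0.0, 1.0)),   # about to enter
]
current_list = [
    DetectedObject(1, person, (0.0, 0.5), (0.0, 1.0)),
    DetectedObject(2, person, (1.0, -0.6), (0.0, -1.0)),
    DetectedObject(3, person, (2.0, -2.0), (0.0, 0.0)),
    DetectedObject(4, person, (3.0, 0.8), (0.0, 1.0)),
]

visitors = update_count(10, previous_list, current_list)
print(visitors)
```

Two people enter, one leaves, and one stays in the queue, so the count rises by one.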

Regulated visitor flow thanks to LiDAR data processing

The city of Lidartown is very pleased with the use of LiDAR on the concert site. The software in our example triggered a notification to the organizers when the number of visitors exceeded 900. This allowed event management to keep a closer eye on the number of visitors from then on, so that they could stop admission in time. The rest of the time they could pursue other tasks or just listen to the band while LiDAR kept an eye on the important things for them.
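The notification rule from the example can be sketched as a small monitor that reacts to the current visitor count. The limits of 900 and 1000 come from the scenario above; the class name, the event strings, and the choice to send the warning only once per crossing are illustrative assumptions.

```python
class OccupancyMonitor:
    """Emit events when the visitor count crosses the warning level or the limit."""

    def __init__(self, warn_above=900, limit=1000):
        self.warn_above = warn_above
        self.limit = limit
        self.warned = False

    def update(self, count):
        events = []
        if count > self.warn_above and not self.warned:
            self.warned = True  # warn once, not on every frame
            events.append("notify organizers")
        elif count <= self.warn_above:
            self.warned = False  # re-arm after the crowd has thinned out
        if count >= self.limit:
            events.append("stop admission")
        return events


monitor = OccupancyMonitor()
history = [monitor.update(c) for c in (850, 901, 950, 1000)]
print(history)
```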